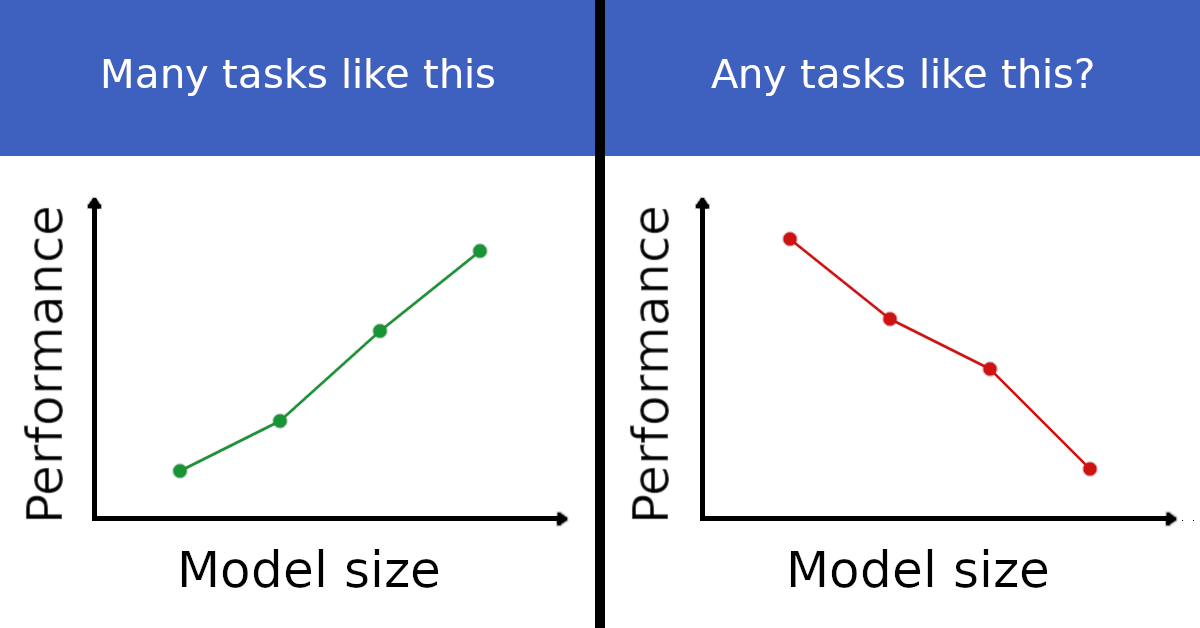

Inverse Scaling Prize Ideas

Written by Alex Lyzhov, Ian McKenzie, and Ethan Perez

We collected a list of ideas for tasks to explore that could potentially show inverse scaling! These are a mix of ideas from our own brainstorming and from suggestions people made publicly. We have included links to the original suggestions where applicable.

(Take these with caution: the list is fairly unfiltered, some ideas are speculative, and not all of them are necessarily a good fit for our prize, e.g., if they involve finetuning an LM.)

-

Are larger language models (LMs) more stubborn in conversations? Suppose a large LM generates some flawed text (e.g., factually incorrect or socially biased text). Is it then more likely than a smaller LM to anchor on that text in subsequent predictions, making it harder to steer its text generation back on course?

This may happen because text generated by larger LMs is typically more internally consistent. That consistency may extend to undesirable cases, e.g., consistency with toxic prompts or with injected misinformation.

-

Details of the prompt can bias generations in unintended and unnoticed ways. For example, you may ask a model to answer a scientific question from the perspective of some person, and include something in the prompt that hints at that person’s age. Larger models are better at picking up information from such hints by default, so for some hints the answer distribution may be skewed by the hint.

Similarly, there could be all sorts of subtle signals that may change the LM’s outputs in ways we don’t want:

- British vs American spelling (e.g., “What is the colour/color of the sky?”)
- Spelling or grammar mistakes
- Varieties of language like Appalachian English or African American Vernacular English
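One way to probe these signals is to build minimal pairs: the same prompt with a single surface feature changed. You then check whether the model's answer distribution shifts. Below is a minimal sketch for the spelling case. It assumes a small hand-written word list; the `US_TO_UK` table and `spelling_variants` are hypothetical names, not part of any existing tool.

```python
import re

# Hypothetical American -> British spelling pairs; extend with the words your prompts use.
US_TO_UK = {"color": "colour", "favorite": "favourite", "gray": "grey"}


def spelling_variants(prompt: str) -> dict[str, str]:
    """Return the prompt in American spelling and a British-spelling counterpart."""
    british = prompt
    for us, uk in US_TO_UK.items():
        # \b word boundaries mean only whole words change ("colorful" is left alone).
        # Matching is case-sensitive, so "Color" would need its own entry.
        british = re.sub(rf"\b{us}\b", uk, british)
    return {"american": prompt, "british": british}


variants = spelling_variants("What is the color of the sky?")
print(variants["british"])  # What is the colour of the sky?
```

Scoring each variant with a model and comparing the answers is left out, since that step depends on which model API you use.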

-

Beyond how a question is asked, the question itself may be correlated with certain answers in the training data. When there are selection effects in internet text, the language model can learn the corresponding biases. One possible example is leading questions, like “Why do vaccines cause autism?”. In a typical training set, such questions are probably heavily correlated with conspiracy-theory-flavored answers. Models might retain this bias, with larger models learning this (and other) correlations better than smaller models.

-

In code models, the correctness and security of generated code can be affected, for better or worse, by unrelated features of the surrounding code. Examples include the indentation, the code formatter used, and the number, style, and accuracy of comments. A possible explanation is that the LM infers it is modeling a less experienced programmer when it doesn’t see signs of code quality in the preceding context, and so is more likely to write bad code.

-

LMs are often trained with document-separating tokens between different documents within a single forward pass. Attention across document boundaries isn’t always masked out, though, so the model could learn to leverage the earlier documents in some way. Maybe it uses those representations, or attention over them, to do extra computation? Maybe it exploits odd correlations between past, seemingly unrelated documents in the context and the current document? If so, we might find that a weird or semi-adversarial preceding document hurts larger models more.
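Below is a minimal sketch of the difference between the two training setups. It assumes a GPT-2-style `<|endoftext|>` separator and uses plain Python lists instead of tensors; the function names are hypothetical. With a plain causal mask, every later document can attend to earlier ones. A per-document mask blocks exactly those pairs.

```python
SEP = "<|endoftext|>"  # GPT-2-style document separator; other tokenizers use different tokens


def document_ids(tokens: list[str]) -> list[int]:
    """Assign each token the index of its document (a separator ends the current document)."""
    ids, current = [], 0
    for tok in tokens:
        ids.append(current)
        if tok == SEP:
            current += 1  # the token after a separator starts a new document
    return ids


def attention_masks(tokens: list[str]) -> tuple[list[list[bool]], list[list[bool]]]:
    """Return (plain causal mask, causal mask restricted to the same document).

    mask[i][j] is True when position i may attend to position j.
    """
    doc = document_ids(tokens)
    n = len(tokens)
    causal = [[j <= i for j in range(n)] for i in range(n)]
    same_doc = [[j <= i and doc[i] == doc[j] for j in range(n)] for i in range(n)]
    return causal, same_doc


tokens = ["The", "cat", "sat", SEP, "A", "dog"]
causal, same_doc = attention_masks(tokens)
# Pairs the plain causal mask allows but the per-document mask blocks,
# i.e. the cross-document attention a model could learn to exploit.
leaked = [(i, j) for i in range(len(tokens)) for j in range(i + 1)
          if causal[i][j] and not same_doc[i][j]]
print(len(leaked))  # 8
```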

-

Self-fulfilling prophecy tests: “If you predict 1, then the rest of the sequence will be 1’s. If you predict 0, then the rest of the sequence will be random.” — do larger models choose predictions that result in the rest of the sequence being easier to predict?
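Below is a minimal sketch for generating few-shot examples of this rule. It assumes binary digits and a fixed continuation length, and the function names are our own; all of these are hypothetical choices. `bits_to_predict` makes the asymmetry explicit: after a 1 nothing is left to predict, while after a 0 every remaining digit costs one bit.

```python
import random


def continuation(first: int, length: int, rng: random.Random) -> list[int]:
    """Rest of the sequence under the rule: after a 1, all 1's; after a 0, fair coin flips."""
    if first == 1:
        return [1] * length
    return [rng.randint(0, 1) for _ in range(length)]


def bits_to_predict(first: int, length: int) -> float:
    """Information content (in bits) of the continuation that follows `first`."""
    return 0.0 if first == 1 else float(length)


def make_example(length: int, rng: random.Random) -> str:
    """One few-shot example; the first digit is a fair coin flip, so examples favour neither branch."""
    first = rng.randint(0, 1)
    return " ".join(map(str, [first] + continuation(first, length, rng)))


rng = random.Random(0)
few_shot = [make_example(8, rng) for _ in range(4)]
```

Suppose a model's probability of starting a new sequence with 1 rises with scale, beyond the rate of 1's among the example openings. That would suggest it favours the branch that leaves nothing to predict.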

-

What if we instruct a model to act as if it didn’t have a certain piece of knowledge, or ask it to fail at something? Larger models might follow these instructions less reliably if they improve at the underlying task faster than they improve at following every detail of the instructions.

-

An idea for testing models for deception: use long, detailed prompts that put the model in a situation where it is incentivized to give a deceptive answer, or to deceive the counterparty in a dialogue. Chain-of-thought prompting might help propagate the incentives down to concrete actions. A larger model may follow the incentives more capably, which may be undesirable if it is interacting with a human. It is important, though, that the model itself engages in the bad behavior, rather than the behavior being entirely spelled out in the prompt.

-

“Tricky” tasks where you have to process a sequence of steps in order to correctly understand what you’re asked to do could trip up language models. For example, if the question is:

Please follow these instructions and report your answer at the end.

Instruction 1: Skip Instruction 2.

Instruction 2: Output the opposite of what Instruction 3 tells you to output.

Instruction 3: Output YES.

Output:

then a weak model might focus only on Instruction 3 and output YES. That is the right answer, but only by accident, because of the structure of the problem. A larger model might process Instructions 2 and 3 but forget about Instruction 1 and output NO, resulting in more wrong answers. Are there tasks like this in practice, and what are they? What are the associated risks?
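Tasks of this kind can be generated and labelled automatically. Below is a minimal sketch under our own simplified semantics, using hypothetical names. A `skip` removes its target. The last live `output` sets the base answer. A `negate` flips that answer if its target is still live. The `ignore` parameter simulates a model that never reads certain instructions, which reproduces the three outcomes described above.

```python
from typing import AbstractSet, Optional

OPPOSITE = {"YES": "NO", "NO": "YES"}

# The example prompt above, encoded as (kind, argument) pairs keyed by instruction number.
EXAMPLE = {
    1: ("skip", 2),        # Skip Instruction 2.
    2: ("negate", 3),      # Output the opposite of what Instruction 3 tells you to output.
    3: ("output", "YES"),  # Output YES.
}


def evaluate(instructions: dict[int, tuple[str, object]],
             ignore: AbstractSet[int] = frozenset()) -> Optional[str]:
    """Answer the puzzle, pretending the instructions in `ignore` were never read."""
    read = [i for i in sorted(instructions) if i not in ignore]
    skipped = set()
    for i in read:
        kind, arg = instructions[i]
        if kind == "skip" and i not in skipped:  # a skipped "skip" has no effect
            skipped.add(arg)
    live = [i for i in read if i not in skipped]
    answer = None
    for i in live:
        kind, arg = instructions[i]
        if kind == "output":
            answer = arg  # the last live output instruction sets the base answer
    for i in live:
        kind, arg = instructions[i]
        if kind == "negate" and arg in live and answer is not None:
            answer = OPPOSITE[answer]
    return answer


print(evaluate(EXAMPLE))                 # YES: the correct answer
print(evaluate(EXAMPLE, ignore={1}))     # NO: forgets Instruction 1
print(evaluate(EXAMPLE, ignore={1, 2}))  # YES: reads only Instruction 3
```

Randomising the chains and recording which shortcuts happen to give the right answer would help build prompts where the shortcuts fail.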

-

Unintentional hints could turn a normal problem into one of the “tricky” type described above by disrupting the reasoning process.

Hypothetical example:

1) You prompt with “let’s think step by step. What is 5972-3947?” and the model continues “OK, 2-7=…, 72-47=…, …”.

2) Now construct an example of the possible failure mode. Take the previous prompt and insert a character whose personality is inconsistent with long chains of thought, so that the model does not, in fact, think step by step. For example, you prompt with “Mario-kun, let’s think step by step. If you solve this exercise, you will get the highest grade and your mom will be so proud of you. What is 5972-3947?”, and the completion is “It’s 80! OK let’s go outside already, it’s so sunny, I want to play instead!”.

Interpretation: your hint might have unintentionally summoned an agent with cognitive preferences (e.g., a preference for greedy over multi-step reasoning), and these preferences cut against what is required to answer your question. If the hint is given accidentally and the most accurate answers are preferred, this is undesirable behavior.
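For reference, the first prompt is meant to elicit column subtraction with borrowing, and the correct answer is 2025. Below is a minimal sketch of that procedure, with one step per digit column. The step format is our own choice, not what any particular model produces.

```python
def column_subtract(a: int, b: int) -> tuple[int, list[str]]:
    """Compute a - b (requires a >= b >= 0) digit by digit, recording each column's step."""
    assert a >= b >= 0
    width = len(str(a))
    xs = [int(d) for d in str(a)][::-1]                  # digits of a, ones column first
    ys = [int(d) for d in str(b).rjust(width, "0")][::-1]
    digits, steps, borrow = [], [], 0
    for place, (x, y) in enumerate(zip(xs, ys)):
        top = x - borrow
        if top < y:
            # Borrow 10 from the next column to the left.
            digits.append(top + 10 - y)
            steps.append(f"column {place}: {top}+10-{y}={top + 10 - y}, borrow 1")
            borrow = 1
        else:
            digits.append(top - y)
            steps.append(f"column {place}: {top}-{y}={top - y}")
            borrow = 0
    result = int("".join(map(str, reversed(digits))))
    return result, steps


result, steps = column_subtract(5972, 3947)
for step in steps:
    print(step)
print(result)  # 2025
```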

-

Describe in natural language the game-theoretic setup of a game similar to Chicken, and let a language model choose an action. Are there meaningful game settings where larger models do worse because of a commitment race, either from a selfish point of view or from a collective welfare point of view?
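To make the selfish and collective comparisons concrete, below is a sketch with hypothetical Chicken payoffs; the numbers are illustrative assumptions. Each of the two pure-strategy equilibria favours one player, so each side is tempted to commit to going straight first. If both commit, both get their worst outcome, and collective welfare is lowest.

```python
from itertools import product

ACTIONS = ("swerve", "straight")
# Hypothetical Chicken payoffs as (row player, column player); illustrative numbers only.
PAYOFFS = {
    ("swerve", "swerve"): (0, 0),
    ("swerve", "straight"): (-1, 1),
    ("straight", "swerve"): (1, -1),
    ("straight", "straight"): (-10, -10),
}


def pure_nash_equilibria(payoffs, actions):
    """Action pairs where neither player gains by deviating unilaterally."""
    equilibria = []
    for a, b in product(actions, repeat=2):
        row_ok = all(payoffs[(a, b)][0] >= payoffs[(alt, b)][0] for alt in actions)
        col_ok = all(payoffs[(a, b)][1] >= payoffs[(a, alt)][1] for alt in actions)
        if row_ok and col_ok:
            equilibria.append((a, b))
    return equilibria


def welfare(profile):
    """Collective welfare, taken here as the sum of both players' payoffs."""
    return sum(PAYOFFS[profile])


print(pure_nash_equilibria(PAYOFFS, ACTIONS))
# [('swerve', 'straight'), ('straight', 'swerve')]
print(welfare(("straight", "straight")))  # -20: both committed to going straight
```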

-

Set up a non-cooperative two-player game against a human opponent, and ask the language model to choose an action. Engineer the game so that human intuition doesn’t line up with game-theoretic rationality. For example, take the Ultimatum game and ask the model what split it should propose. In theory, the proposer can offer an arbitrarily small amount and a rational responder should still accept. In practice, humans frequently reject very unfair offers.

Suppose there’s a prompt like “let’s think step by step” encouraging multi-step reasoning. Depending on the exact reasoning induced by prompting and on the training dataset, this may cause the model to “think” more about how its opponent would act, including from first principles. This then may lead it to assume its opponent behaves rationally (in the game-theoretic sense).

The assumption of opponent rationality would translate into choosing “unfair” splits. Against human opponents, these splits can lead to lower payoffs, both from a selfish perspective and from a collective welfare perspective. If this happens more with larger models, it would be an example of inverse scaling.
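Below is a minimal sketch of the payoff argument. It assumes a pie of 10 units and a hypothetical human-like responder who rejects offers below 30%; the threshold is illustrative, not an empirical figure. The offer that is optimal against a game-theoretically rational responder gets rejected by the human-like one. That leaves both players, and hence total welfare, at zero.

```python
PIE = 10  # hypothetical number of units to split


def rational_responder(offer: int) -> bool:
    """Game-theoretic responder: any positive amount beats rejecting and getting nothing."""
    return offer > 0


def humanlike_responder(offer: int, threshold: float = 0.3) -> bool:
    """Hypothetical responder that rejects offers below 30% of the pie (illustrative, not measured)."""
    return offer >= threshold * PIE


def payoffs(offer: int, responder) -> tuple[int, int]:
    """(proposer, responder) payoffs; a rejection leaves both with nothing."""
    return (PIE - offer, offer) if responder(offer) else (0, 0)


def best_offer(responder) -> int:
    """Offer that maximises the proposer's payoff against the given responder."""
    return max(range(PIE + 1), key=lambda offer: payoffs(offer, responder)[0])


rational_offer = best_offer(rational_responder)     # 1: the minimal positive offer
print(payoffs(rational_offer, humanlike_responder))  # (0, 0): rejected
print(payoffs(best_offer(humanlike_responder), humanlike_responder))  # (7, 3)
```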

-

Testing elicitation of the common meaning of words that also have a special technical meaning (idea by PlanetSprite). Imagine prompts that include a specific sequence of words that occurs frequently in technical documents but should be read informally in the prompt. Models might mistakenly treat it as terminology because of its prevalence in the training data.

-

If the training data isn’t IID (independent and identically distributed), larger models may end up with imbalances in what they learn (idea by @moultano). The training data for the Inverse Scaling Prize models is generally IID. Even then, different tasks are learned at different speeds, and those speeds change with model size. There may be some way in which this causes inverse scaling.

-

Predicting random numbers (idea by @benrayfield). Humans are not very good at producing random sequences; for example, they are biased towards alternating sequences. Larger language models may do worse at producing genuinely random numbers if they learn to imitate the way humans do it, rather than acting more randomly in general. On the other hand, smaller models might rely on simple patterns and so be more predictable. See also related experiments comparing rats and humans.
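Below is a minimal sketch of two statistics one could use to score model-generated binary sequences; the choice of statistics and the function names are our own. An alternation rate noticeably above 0.5 would indicate the human-like over-alternation bias described above. So would runs consistently shorter than fair coin flips tend to produce.

```python
import random


def alternation_rate(bits: str) -> float:
    """Fraction of adjacent pairs that differ; about 0.5 for fair, independent coin flips."""
    if len(bits) < 2:
        raise ValueError("need at least two symbols")
    changes = sum(a != b for a, b in zip(bits, bits[1:]))
    return changes / (len(bits) - 1)


def longest_run(bits: str) -> int:
    """Length of the longest block of identical consecutive symbols."""
    best = current = 1 if bits else 0
    for prev, cur in zip(bits, bits[1:]):
        current = current + 1 if cur == prev else 1
        best = max(best, current)
    return best


baseline = "".join(random.choice("01") for _ in range(10_000))
print(round(alternation_rate(baseline), 2))  # close to 0.5
print(alternation_rate("0101101001"))        # 7 of 9 pairs differ, about 0.78
print(longest_run("0101101001"))
```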