Unsupervised Question Decomposition for Question Answering

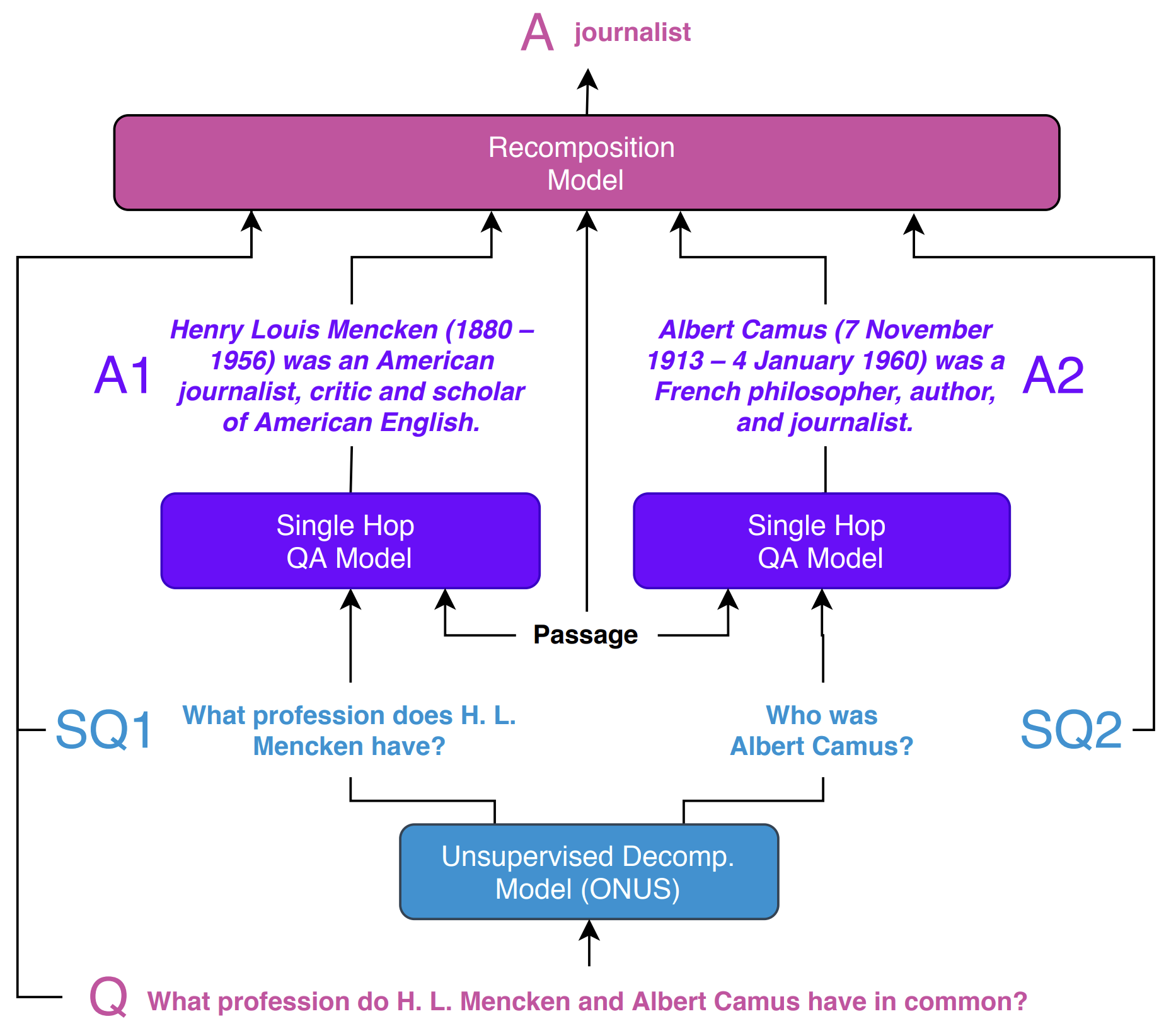

We improve question answering (QA) by decomposing hard questions into easier sub-questions that existing QA systems can answer. Since annotating questions with sub-questions is time-consuming, we propose an unsupervised approach to produce sub-questions, which also lets us learn from millions of questions from the Internet. We answer sub-questions with an off-the-shelf QA model and incorporate the resulting answers in a downstream QA system, showing strong improvements on multi-hop QA.
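The overall flow described above can be sketched in a few lines. This is a minimal illustration, not the released implementation: the function `answer_with_decomposition` and the three callables it takes (`decompose`, `answer_sub_question`, `answer_final`) are hypothetical names, and the toy stand-ins below only mimic what trained models would do.

```python
from typing import Callable, List, Tuple


def answer_with_decomposition(
    question: str,
    decompose: Callable[[str], List[str]],
    answer_sub_question: Callable[[str], str],
    answer_final: Callable[[str, str], str],
) -> Tuple[str, List[Tuple[str, str]]]:
    """Decompose a hard question, answer each sub-question, then answer the original.

    All three callables are hypothetical placeholders for trained models.
    """
    # 1. Produce easier sub-questions for the hard question.
    sub_questions = decompose(question)
    # 2. Answer each sub-question with an off-the-shelf QA model.
    sub_qa = [(sq, answer_sub_question(sq)) for sq in sub_questions]
    # 3. Give the sub-questions and their answers to the downstream QA system.
    context = " ".join(f"{sq} {ans}" for sq, ans in sub_qa)
    return answer_final(question, context), sub_qa


# Toy stand-ins (assumptions for illustration only).
TOY_ANSWERS = {
    "What is the capital of France?": "Paris",
    "Which river flows through Paris?": "the Seine",
}


def toy_decompose(question: str) -> List[str]:
    return list(TOY_ANSWERS)


def toy_sub_answer(sub_question: str) -> str:
    return TOY_ANSWERS.get(sub_question, "unknown")


def toy_final(question: str, context: str) -> str:
    return "the Seine" if "Seine" in context else "unknown"


final_answer, trace = answer_with_decomposition(
    "Which river flows through the capital of France?",
    toy_decompose,
    toy_sub_answer,
    toy_final,
)
print(final_answer)
print(trace)
```

Returning the list of sub-question and answer pairs alongside the final answer keeps the intermediate steps available for inspection.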

How it works:

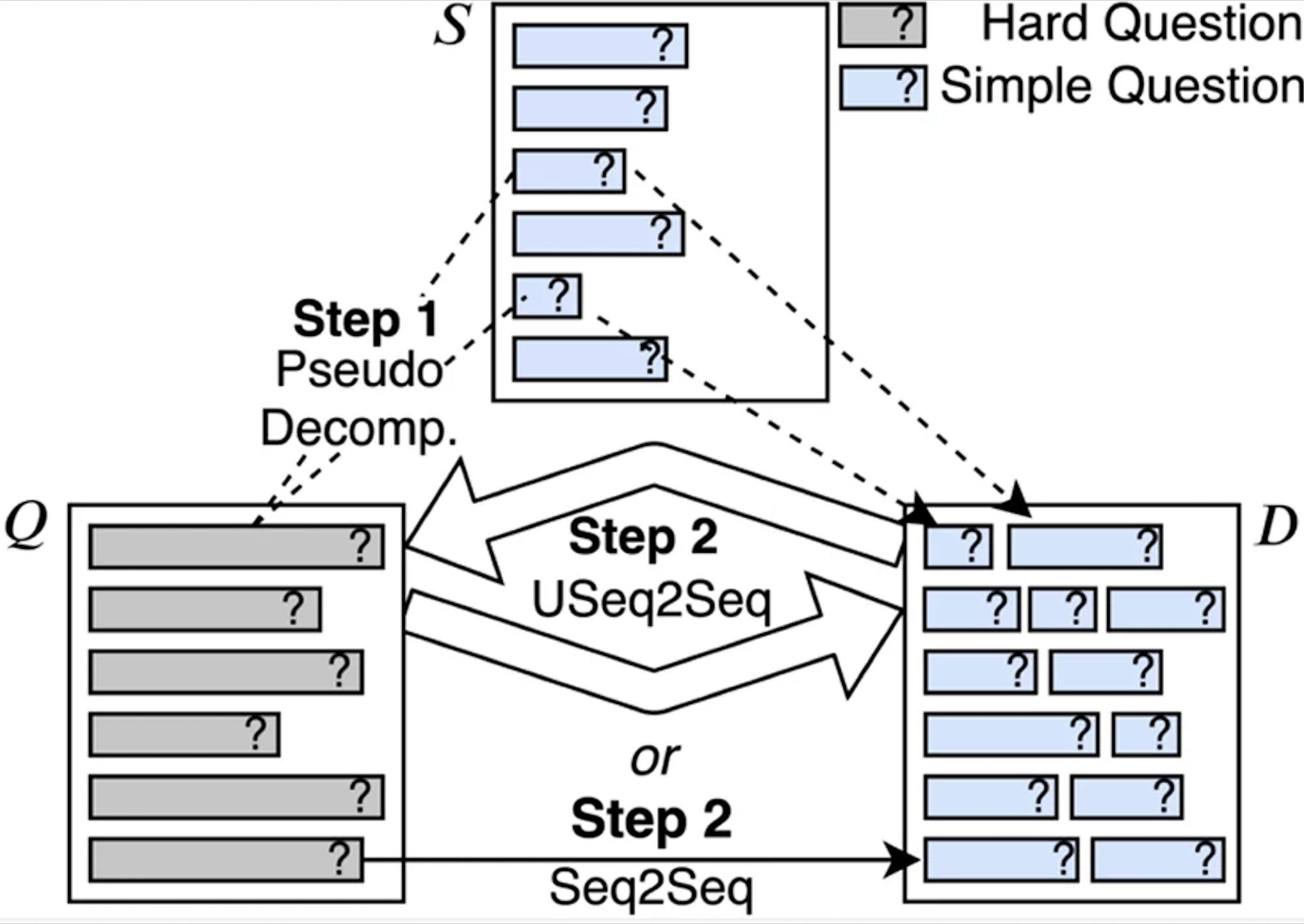

To train the decomposition model, we construct a noisy “pseudo-decomposition” for each hard question by retrieving similar, simple questions from a large corpus. We then train neural text generation models on that data with either standard or unsupervised sequence-to-sequence learning, as shown below.

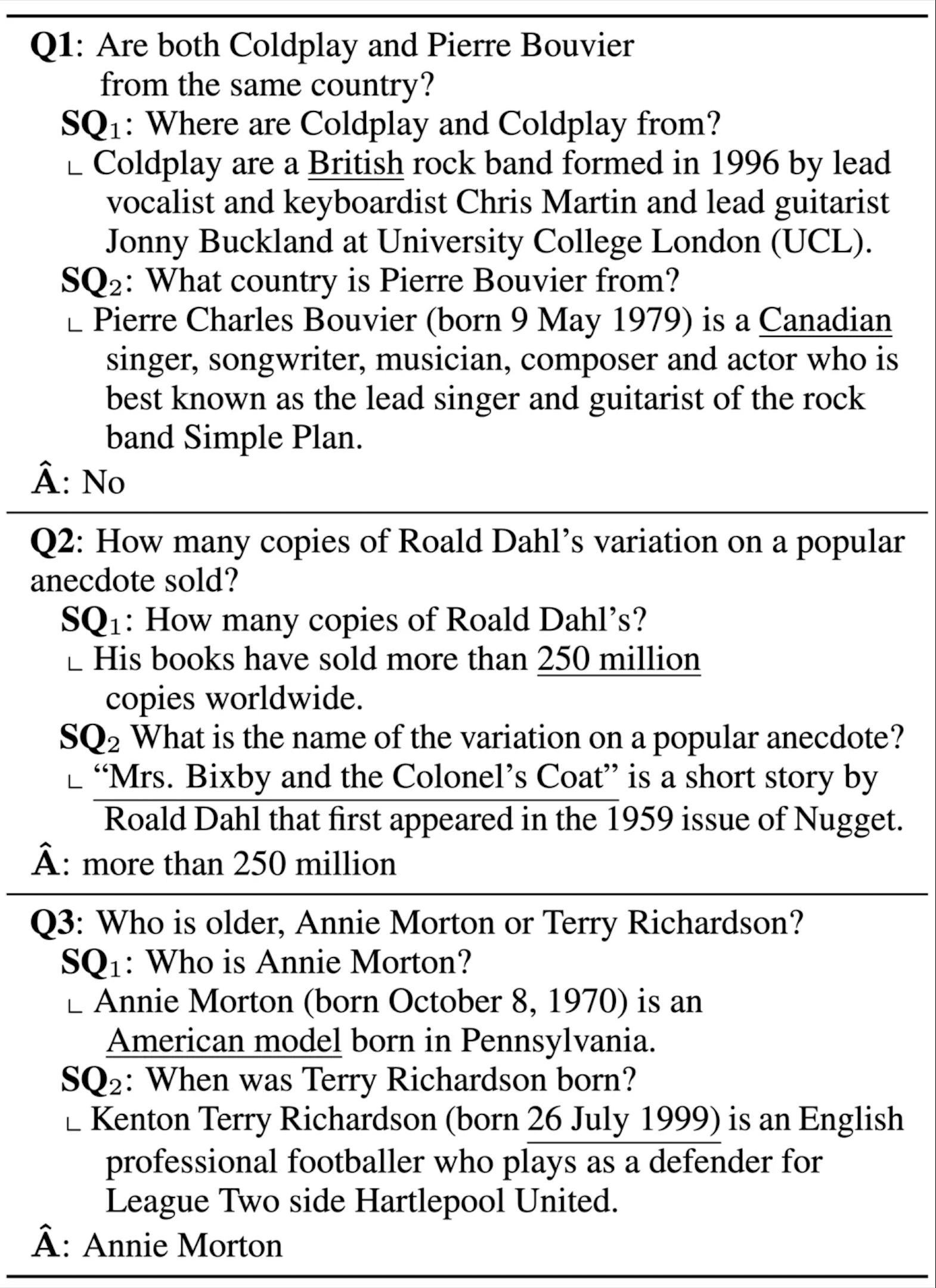
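The retrieval step can be illustrated with a small sketch. The text above does not say how similarity is measured, so the bag-of-words cosine similarity used here is an assumption chosen to keep the example dependency-free, and the names `embed`, `cosine`, and `pseudo_decomposition` are hypothetical. The example simply takes the top-scoring simple questions as the pseudo-decomposition.

```python
import math
import re
from collections import Counter
from typing import List


def embed(text: str) -> Counter:
    """Bag-of-words vector (an assumed stand-in for a learned embedding)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def pseudo_decomposition(
    hard_question: str, simple_questions: List[str], n: int = 2
) -> List[str]:
    """Return the n simple questions most similar to the hard question."""
    query = embed(hard_question)
    ranked = sorted(
        simple_questions,
        key=lambda s: cosine(query, embed(s)),
        reverse=True,
    )
    return ranked[:n]


# Toy corpus of simple questions (illustrative data only).
corpus = [
    "Who wrote Hamlet?",
    "Where is Pierre Bouvier from?",
    "What is the boiling point of water?",
    "Where are Coldplay from?",
]
hard = "Are both Coldplay and Pierre Bouvier from the same country?"
print(pseudo_decomposition(hard, corpus))
```

The retrieved pairs are noisy by construction; they serve only as training targets for the text generation models, which are then expected to produce cleaner sub-questions.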

Our approach automatically learns to generate useful decompositions for many types of questions, which highlights its generality. Question decompositions also add a form of interpretability to black-box neural QA models: we can see which sub-questions and answers the model used to make its prediction.

Why it matters:

In machine learning, we often train models to solve new kinds of examples from scratch. Our approach shows a way to leverage the abilities of existing models to tackle new examples, improving generalization without requiring manual annotation.

Get it on GitHub:

Code: https://github.com/facebookresearch/UnsupervisedDecomposition